In an era where AI verification, real-time assessment, and digital transparency are becoming the norm, the days of hiding behind false credentials, borrowed expertise, and intellectual theft are numbered. Individuals can no longer fake competence, steal credit, or manipulate perceptions without consequence. Technology has shifted the playing field – your actual abilities, cognitive depth, and adaptive intelligence can now be measured, validated, and exposed in real time. For centuries, people have thrived by copying, imitating, and presenting themselves as more capable than they truly are. They have hidden behind borrowed insights, repackaged ideas, and surface-level knowledge, using deception to navigate academia, business, and cutting-edge industries such as AI and biotech. However, with the rise of increasingly capable AI, AI-driven verification systems, instant biological and intellectual assessment, and blockchain-backed credentialing, the gap between what people claim and what they actually know is becoming quantifiable. The world is shifting toward a tech-verified meritocracy, where only those who truly possess knowledge, skills, and originality will survive.

Why Intellectual Thieves Are Vulnerable

Those who steal knowledge, credit, ideas, and credentials to make themselves look good are setting themselves up for failure. AI-driven verification, real-time performance assessment, and adaptive intelligence systems are making it increasingly difficult to sustain the illusion of expertise. With unearned endorsements, such people may temporarily deceive others, but they remain highly vulnerable to exposure, manipulation, and eventual obsolescence. AI detects inconsistencies in real time, revealing when borrowed knowledge cannot be applied dynamically under scrutiny. Many fraudsters rely on memorised scripts or plagiarised content, but AI-assisted hiring platforms and adaptive questioning models quickly differentiate between surface-level recall and deep understanding. When placed in high-pressure problem-solving situations that require spontaneous thinking, copycats inevitably fail. AI also cross-references vast datasets – including research papers, patents, code repositories, and written content – making it far harder for anyone to claim ownership of borrowed work. As AI-powered plagiarism detection and similarity analysis continue to advance, false experts are increasingly exposed as impostors, and their credibility is irreversibly damaged.

Beyond detection, intellectual thieves are inherently static in their knowledge, which leaves them unable to adapt to evolving systems. AI-driven industries such as algorithmic trading, cybersecurity, and machine learning require continuous innovation. Those who rely on imitation struggle to keep up and become obsolete the moment systems advance. Furthermore, copycats are particularly prone to manipulation, because they lack a deep understanding of the knowledge they claim. They can be easily misled by misinformation or subtle errors, exposing their incompetence when faced with expert-level scrutiny. The coming AI-verified world will not only expose false expertise – it will actively eliminate those who engage in deception.
The future belongs to individuals who develop real, adaptive intelligence, continuously upgrading their own features and functionalities rather than relying on imitation.

Technical Approach to AI-Driven Verification & Fraud Detection

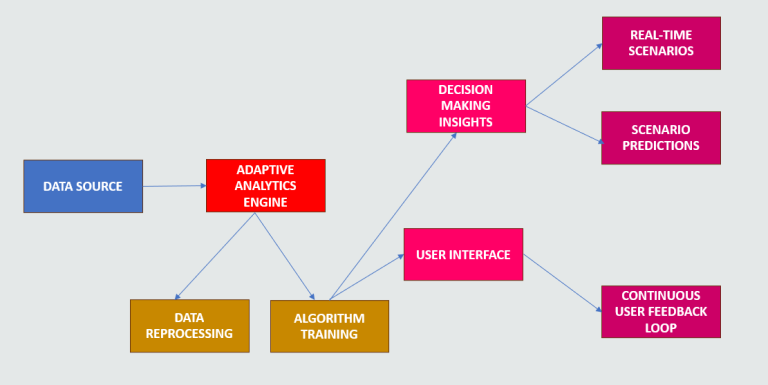

AI-Driven Knowledge Validation Systems. To enforce intellectual authenticity, AI utilises multi-modal verification architectures that integrate natural language processing (NLP), knowledge graphs, and behavioural biometrics. These systems analyse patterns in speech, writing, and problem-solving to determine whether an individual truly understands a subject or is simply regurgitating stolen knowledge. They do not just check whether an answer is correct; they analyse semantic meaning, logical coherence, and depth of thought. By measuring factors such as logical coherence, response evolution, and real-time cognitive adaptability, AI can calculate an individual's Knowledge Authenticity Score (KAS), ensuring that only those with genuine expertise pass validation. The KAS is built on a function S(X, Y), which measures semantic similarity and logical validity between a person's response X and verified knowledge sources Y. AI also employs adaptive challenge-response testing, generating progressive, evolving challenges based on real-time responses. Unlike static exams, this approach builds a Problem-Solving Tree (PST), so that individuals are tested on their ability to synthesise information rather than memorise facts.
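To make the scoring idea concrete, here is a minimal sketch of one plausible form of the KAS, assumed here rather than taken from any specific system: the mean of S(X_i, Y_i) over n answered prompts, KAS = (1/n) Σ S(X_i, Y_i). Bag-of-words cosine similarity stands in for S; it captures only lexical overlap, not semantic meaning or logical validity, which real systems would approach with learned embeddings and reasoning checks. The function names and the 0.7 pass threshold are hypothetical.

```python
import math
from collections import Counter


def cosine_similarity(x: str, y: str) -> float:
    """Bag-of-words cosine similarity: a crude, purely lexical stand-in for S(X, Y)."""
    a, b = Counter(x.lower().split()), Counter(y.lower().split())
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def knowledge_authenticity_score(responses: list[str], references: list[str]) -> float:
    """Hypothetical KAS: mean of S(X_i, Y_i) over paired responses and verified references."""
    if len(responses) != len(references) or not responses:
        raise ValueError("need equal, non-empty lists of responses and references")
    scores = [cosine_similarity(x, y) for x, y in zip(responses, references)]
    return sum(scores) / len(scores)


def passes_validation(kas: float, threshold: float = 0.7) -> bool:
    """Hypothetical pass/fail rule; the 0.7 threshold is an arbitrary illustration."""
    return kas >= threshold


if __name__ == "__main__":
    refs = ["gradient descent updates weights against the gradient of the loss"]
    answers = ["gradient descent moves the weights against the gradient of the loss"]
    kas = knowledge_authenticity_score(answers, refs)
    print(round(kas, 3), passes_validation(kas))
```

Averaging keeps the score on the same 0 to 1 scale as S, so a single threshold can be applied regardless of how many prompts were asked.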

Deepfake Detection for Intellectual Theft & Fraud Prevention. Many fraudsters attempt to bypass verification using AI-generated content, impersonations, or deepfake-assisted presentations. AI leverages Generative Adversarial Network (GAN) discriminators to distinguish between authentic and synthetic data. The GAN framework trains a generator G against a discriminator D through the minimax objective
$$\min_G \max_D V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}}[\log D(x)] + \mathbb{E}_{z \sim p_z}[\log(1 - D(G(z)))]$$
where D(x) represents the probability that a given work is authentic, based on neural-network feature extraction. Detection models trained on large datasets of real and synthetic data can reach high accuracy on content similar to their training data, although accuracy typically drops on output from unseen generators or on content that has been paraphrased or edited. Within this framework, AI also runs real-time skill assessment simulations, subjecting users to progressive, dynamically evolving challenges through adaptive questioning and deep problem-solving trees. This exposes those who rely on surface-level memorisation and, together with GAN-based detection of AI-generated or manipulated content, prevents fraudsters from passing off stolen knowledge as original work.
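The role of D(x) can be illustrated without training a model. This is a minimal sketch, assuming we already have discriminator outputs (probabilities in the open interval (0, 1)) for a batch of known-authentic and known-synthetic works: it estimates the GAN value function V(D, G) empirically and flags items whose authenticity probability falls below a threshold. The scores, item names, and the 0.5 cut-off are illustrative assumptions, not measurements.

```python
import math


def empirical_gan_value(d_real: list[float], d_fake: list[float]) -> float:
    """Monte Carlo estimate of V(D, G) = E[log D(x)] + E[log(1 - D(G(z)))].

    d_real: discriminator outputs D(x) on authentic samples.
    d_fake: discriminator outputs D(G(z)) on synthetic samples.
    """
    real_term = sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term


def flag_synthetic(scores: dict[str, float], threshold: float = 0.5) -> list[str]:
    """Return IDs whose D(x), the probability of being authentic, is below the threshold."""
    return [item for item, p in scores.items() if p < threshold]


if __name__ == "__main__":
    # Hypothetical discriminator outputs, not real measurements.
    print(empirical_gan_value([0.9, 0.8], [0.1, 0.2]))
    print(flag_synthetic({"essay_a": 0.92, "essay_b": 0.31}))  # ['essay_b']
```

A discriminator that separates the two sets well pushes V towards its upper bound of 0; at the GAN equilibrium, where D(x) = 1/2 everywhere, V equals -log 4.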

Blockchain-Backed Proof-of-Skill (BPoS) Systems. To eliminate credential fraud, AI integrates blockchain verification into skill assessments. A Proof-of-Skill (PoS) mechanism cryptographically links an individual's biometric markers and verified expertise into an immutable ledger, so that only verified individuals can claim expertise and fake credentials, impersonation, or stolen work are invalidated at the root level. AI-verified credentials, zero-knowledge proofs, and biometric confirmation mechanisms together establish an immutable, tamper-evident record of genuine intellectual contributions, making impersonation far harder to sustain. Collectively, these verification frameworks make false pretence unsustainable in a tech-verified world.
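The tamper-evidence a BPoS ledger relies on comes from hash chaining. This is a minimal sketch, assuming a single in-memory list stands in for the ledger: each record stores the SHA-256 hash of its predecessor, so editing any earlier entry breaks verification. It deliberately omits consensus, digital signatures, zero-knowledge proofs, and biometric handling; `SkillLedger`, its fields, and the holder IDs are hypothetical.

```python
import hashlib
import json
import time
from dataclasses import dataclass, field


def record_hash(record: dict) -> str:
    """SHA-256 over a canonical (key-sorted) JSON encoding of the record."""
    payload = json.dumps(record, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


@dataclass
class SkillLedger:
    """Hypothetical append-only, hash-chained log of skill attestations."""
    records: list[dict] = field(default_factory=list)

    def append(self, holder_id: str, skill: str, score: float) -> dict:
        prev = self.records[-1]["hash"] if self.records else "0" * 64
        body = {"holder_id": holder_id, "skill": skill, "score": score,
                "timestamp": time.time(), "prev_hash": prev}
        body["hash"] = record_hash(body)  # hash is computed before the "hash" key exists
        self.records.append(body)
        return body

    def verify(self) -> bool:
        """Recompute every hash and check each record's link to its predecessor."""
        prev = "0" * 64
        for rec in self.records:
            body = {k: v for k, v in rec.items() if k != "hash"}
            if rec["prev_hash"] != prev or record_hash(body) != rec["hash"]:
                return False
            prev = rec["hash"]
        return True


if __name__ == "__main__":
    ledger = SkillLedger()
    ledger.append("holder-001", "signal processing", 0.91)
    ledger.append("holder-001", "control theory", 0.84)
    print(ledger.verify())             # True
    ledger.records[0]["score"] = 0.99  # tamper with an earlier attestation
    print(ledger.verify())             # False
```

Hash chaining makes tampering detectable rather than impossible; preventing a single party from simply rewriting the whole chain is the job of the distributed consensus layer this sketch leaves out.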

Tracing Authenticity Through Human-Generated Data

AI does not operate in isolation – it learns from vast datasets of human-generated content, making it increasingly effective at detecting authenticity, originality, and ownership of intellectual work. Through deep neural network modelling, historical pattern analysis, and blockchain-backed metadata tracking, AI can compare newly presented work against vast archives of existing human-created content to determine whether a person is the true originator or merely a replicator. One of the most advanced approaches in this area involves embedding data provenance into AI training models. Large Language Models (LLMs) and AI-powered detection tools utilise:

• Sequence Alignment & Semantic Analysis – AI scans content for deep structural and linguistic similarities, distinguishing between original expression and paraphrased duplication.

• Neural Pattern Recognition – AI cross-references writing style, sentence construction, and knowledge depth with previously stored datasets of verified authorship to detect inconsistencies.

• Time-Stamped Data Provenance – AI can leverage blockchain or cryptographic fingerprinting to track the original creation date of content, ensuring that only the true creator is credited.
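Sequence alignment and overlap measures can be illustrated with the Python standard library alone. This is a minimal sketch, assuming plain-text inputs: `difflib.SequenceMatcher` gives a word-level alignment ratio, and Jaccard similarity over word n-gram "shingles" catches reused phrasing even when passages are reordered. Neither captures paraphrase at the semantic level, which production systems approach with learned embeddings; the function names and the n = 3 shingle size are illustrative choices.

```python
from difflib import SequenceMatcher


def alignment_ratio(a: str, b: str) -> float:
    """Word-level similarity from difflib's matching-block alignment (0.0 to 1.0)."""
    return SequenceMatcher(None, a.lower().split(), b.lower().split()).ratio()


def shingles(text: str, n: int = 3) -> set[tuple[str, ...]]:
    """Set of overlapping word n-grams ("shingles")."""
    words = text.lower().split()
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}


def jaccard(a: str, b: str, n: int = 3) -> float:
    """Jaccard similarity of the two texts' shingle sets; 0.0 if both are empty."""
    sa, sb = shingles(a, n), shingles(b, n)
    if not sa and not sb:
        return 0.0
    return len(sa & sb) / len(sa | sb)


if __name__ == "__main__":
    original = "the model learns a mapping from noisy inputs to clean outputs"
    suspect = "the model learns a mapping from corrupted inputs to clean outputs"
    print(round(alignment_ratio(original, suspect), 2), round(jaccard(original, suspect), 2))
```

Swapping a single word leaves the alignment ratio high while knocking out every shingle that contains it, which is why the two measures are often reported together.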

The CPU Analogy: Why Performance Must Match Capability

A Central Processing Unit (CPU) serves as an ideal analogy for human intelligence, skill level, and expected performance. Just as CPUs are built for specific workloads based on architecture, core count, and processing speed, individuals also have cognitive limits, problem-solving capacities, and processing efficiency that define what they can truly achieve. A low-power CPU cannot handle high-intensity AI modelling, just as an individual lacking deep expertise cannot sustain high-level intellectual work under scrutiny.

Benchmarking: AI as the Ultimate Performance Tester. CPUs undergo rigorous benchmarking to validate their actual performance, testing processing speed, multitasking capability, and thermal stability. Benchmarking tools stress-test CPUs to determine if their advertised specs match real-world performance. AI now serves as a human benchmarking system, ensuring that claimed expertise aligns with actual ability. Just as overstated CPU specifications fail under stress testing, individuals who fake knowledge will fail AI-driven real-time problem-solving assessments, adaptive intelligence evaluations, and knowledge synthesis tests. Mathematically, CPU performance under benchmarking follows
$$P_{\text{true}} = \frac{C_{\text{tasks}}}{T_{\text{execution}}}$$
where P_true is actual processing capability, C_tasks is the number of computational instructions successfully executed, and T_execution is the actual execution time. A mismatch between claimed and real P_true indicates underperformance or fraudulent specification inflation; the same principle applies to human expertise when measured under AI-driven cognitive benchmarking.
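The benchmarking relation P_true = C_tasks / T_execution, with its symbols as defined in the paragraph above, translates directly into a small timing harness. This is a minimal sketch under two assumptions: each call of the workload counts as one "task" (rather than one machine instruction), and the claimed figure of 1e9 tasks per second is a hypothetical advertised spec. `claim_gap` is an illustrative name for the relative shortfall between claimed and measured performance.

```python
import time
from typing import Callable


def p_true(c_tasks: int, t_execution: float) -> float:
    """Measured throughput, P_true = C_tasks / T_execution (tasks per second)."""
    if t_execution <= 0:
        raise ValueError("execution time must be positive")
    return c_tasks / t_execution


def benchmark(workload: Callable[[], object], c_tasks: int) -> float:
    """Run `workload` c_tasks times and return the measured P_true."""
    start = time.perf_counter()
    for _ in range(c_tasks):
        workload()
    return p_true(c_tasks, time.perf_counter() - start)


def claim_gap(p_claimed: float, p_measured: float) -> float:
    """Relative shortfall of measured against claimed performance (0.0 means the claim holds)."""
    return max(0.0, (p_claimed - p_measured) / p_claimed)


if __name__ == "__main__":
    measured = benchmark(lambda: sum(range(1_000)), c_tasks=1_000)
    gap = claim_gap(p_claimed=1e9, p_measured=measured)  # hypothetical advertised spec
    print(f"measured {measured:.0f} tasks/s, shortfall {gap:.1%}")
```

Reporting the gap as a fraction of the claim, rather than as an absolute difference, lets claims of very different magnitudes be compared on one scale.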

The Problem with Copycats: False High-Performance Claims Fail Under Stress. Copycats who steal knowledge to appear competent are like low-end CPUs falsely reporting high clock speeds – they may pass surface-level checks but fail under intensive workloads. In computing, a processor's advertised boost clock can exceed what it is able to sustain within its Thermal Design Power (TDP) budget. When pushed under sustained load, such processors throttle down to avoid overheating, exposing their true, more limited capability. Similarly, individuals who borrow or steal credit and expertise rather than build it cannot withstand adaptive AI testing. AI-driven assessments measure:

• Response Variability → Can the individual adapt their answers to dynamically shifting challenges?

• Cognitive Load Handling → Does their mental processing break down under extended problem-solving sessions?

• Depth vs. Memorisation → Are they generating original thought, or just regurgitating information?
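The three measurements listed above could be operationalised in many ways. This is a minimal sketch, assuming each assessment item is logged as its session position, whether it was a freshly generated (novel) variant, and whether it was answered correctly. The metric definitions are hypothetical illustrations rather than an established scoring standard: accuracy on novel variants approximates response variability, the least-squares slope of correctness over the session approximates cognitive load handling, and the familiar-minus-novel accuracy gap approximates depth vs. memorisation.

```python
from dataclasses import dataclass


@dataclass
class Item:
    position: int   # order in which the item was presented
    novel: bool     # True if the item was a freshly generated variant
    correct: bool   # whether the response was judged correct


def accuracy(items: list[Item]) -> float:
    return sum(i.correct for i in items) / len(items) if items else 0.0


def load_slope(items: list[Item]) -> float:
    """Least-squares slope of correctness (0/1) against position; negative means decline."""
    n = len(items)
    if n < 2:
        return 0.0
    xs = [i.position for i in items]
    ys = [1.0 if i.correct else 0.0 for i in items]
    mx, my = sum(xs) / n, sum(ys) / n
    var = sum((x - mx) ** 2 for x in xs)
    return sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / var if var else 0.0


def session_metrics(items: list[Item]) -> dict[str, float]:
    novel = [i for i in items if i.novel]
    familiar = [i for i in items if not i.novel]
    return {
        "novel_accuracy": accuracy(novel),                         # response variability proxy
        "load_slope": load_slope(items),                           # cognitive load handling proxy
        "memorisation_gap": accuracy(familiar) - accuracy(novel),  # depth vs. memorisation proxy
    }


if __name__ == "__main__":
    log = [Item(0, False, True), Item(1, False, True), Item(2, True, False), Item(3, True, False)]
    print(session_metrics(log))
```

In this toy log the candidate answers every familiar item and misses every novel one late in the session, so all three proxies point the same way: zero novel accuracy, a declining slope, and a large memorisation gap.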

Like a CPU throttling under stress, individuals with false credentials will fail when tested under real-world application scenarios.

Overclocking and Self-Improvement: The Path to Sustainable Expertise. High-performance CPUs optimise themselves through dynamic frequency boosting and adaptive power management. Similarly, genuine experts continuously upgrade their cognitive abilities through skill refinement, experience, and adaptive learning. However, just as reckless overclocking leads to voltage instability, overheating, and eventual system failure, individuals who try to artificially boost their perceived expertise without a solid foundation will eventually burn out, contradict themselves, or expose their own intellectual gaps. AI-driven human benchmarking evaluates S_true, which represents actual expertise, as a function of K_depth, the depth of knowledge, and A_adaptability, the ability to handle dynamically changing challenges. If an individual fails these assessments, they are flagged as non-authentic, just as a CPU that fails performance consistency checks is considered unreliable.
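The text names the inputs to S_true but not how they combine. This is a minimal sketch, assuming a multiplicative form S_true = K_depth × A_adaptability with both inputs normalised to [0, 1]; the product form and the 0.5 flagging threshold are assumptions for illustration only.

```python
def s_true(k_depth: float, a_adaptability: float) -> float:
    """Hypothetical expertise score: product of knowledge depth and adaptability, each in [0, 1]."""
    for name, v in (("k_depth", k_depth), ("a_adaptability", a_adaptability)):
        if not 0.0 <= v <= 1.0:
            raise ValueError(f"{name} must lie in [0, 1], got {v}")
    return k_depth * a_adaptability


def is_authentic(k_depth: float, a_adaptability: float, threshold: float = 0.5) -> bool:
    """Flag as non-authentic when S_true falls below the (assumed) threshold."""
    return s_true(k_depth, a_adaptability) >= threshold


if __name__ == "__main__":
    print(is_authentic(0.9, 0.8))  # True: deep knowledge that adapts well
    print(is_authentic(0.9, 0.2))  # False: memorised depth that does not transfer
```

A product is used instead of a weighted sum so that high recall cannot compensate for near-zero adaptability: under a plain average, the second case would score 0.55 and pass.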

The Future: AI as the New “System Stability Check” for Human Performance. CPUs are stress-tested under sustained workloads to confirm reliability. Likewise, AI-driven assessment tools will become the standard in education, hiring, and corporate evaluations, ensuring that only those with real, evolving, scalable expertise succeed. In a world where intellectual authenticity determines survivability, only those who genuinely enhance their capabilities will be recognised as high-performance individuals – just as only well-optimised CPUs are trusted for demanding applications.

AI Verification and Its Parallels to QMS & ISO Standardisation

Quality Management Systems (QMS) and ISO (International Organization for Standardization) standards have long served as verification frameworks for ensuring that products, services, and processes meet consistent, high-quality performance criteria. These frameworks provide structured evaluation, benchmarking, and compliance assessments, much like how AI-driven verification now validates intellectual authenticity, expertise, and originality in individuals and organisations.

AI Verification as a Digital QMS for Intellectual Competence. A QMS ensures that organisations maintain efficiency, meet compliance standards, and eliminate defects. Similarly, AI-driven verification acts as a Quality Management System for human expertise, ensuring that claimed intellectual capacity matches real-world capability:

• ISO 9001 (Quality Management) requires processes to be controlled, documented, and auditable → AI ensures that expertise is measurable, verifiable, and not reliant on unverifiable claims.

• ISO/IEC 27001 (Information Security) sets requirements for protecting the confidentiality, integrity, and availability of information → AI ensures that intellectual work is protected, traceable, and free from plagiarism or unauthorised modification.

• ISO/IEC 17025 (Testing & Calibration) requires consistent testing environments → AI benchmarking systems provide consistent, standardised assessments of human cognitive and technical skills.

If an individual's claimed knowledge far exceeds what AI can verify through testing, plagiarism detection, and cognitive assessment, they are flagged as non-compliant (i.e., not meeting expertise standards).

AI Verification as an Intellectual ISO Standard. ISO standards ensure uniformity, repeatability, and measurable quality across industries. AI verification establishes a similar framework, where intellectual expertise is standardised, measurable, and benchmarked globally:

• Consistency: Just as ISO-certified organisations undergo regular surveillance audits, AI-driven expertise verification is continuous, ensuring individuals maintain ongoing competency rather than relying on past credentials.

• Traceability: ISO requires documented proof of compliance → AI maintains immutable digital proof of skills through blockchain-backed credentialing.

• Performance Audits: ISO certification relies on independent third-party assessment → AI ensures expertise is validated through independent AI-driven testing, rather than self-reported claims.
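The consistency principle, continuous verification rather than one-off certification, resembles the periodic surveillance audits that keep an ISO certificate valid. This is a minimal sketch, assuming each credential records the date it was last validated and lapses after a fixed audit interval; the 365-day interval, the `Credential` type, and the skill names are hypothetical.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class Credential:
    skill: str
    last_validated: date


def is_current(cred: Credential, today: date,
               audit_interval: timedelta = timedelta(days=365)) -> bool:
    """A credential counts only if it was re-validated within the audit interval."""
    return today - cred.last_validated <= audit_interval


def lapsed(creds: list[Credential], today: date) -> list[str]:
    """Skills that need re-assessment before they can be relied on again."""
    return [c.skill for c in creds if not is_current(c, today)]


if __name__ == "__main__":
    creds = [Credential("statistics", date(2024, 3, 1)),
             Credential("cryptography", date(2022, 6, 15))]
    print(lapsed(creds, today=date(2024, 9, 1)))  # ['cryptography']
```

Passing `today` explicitly, instead of reading the system clock inside the function, keeps the check deterministic and easy to audit.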

The Future: AI as the ISO Standard for Intellectual Merit. In the future, AI-driven verification will serve as the de facto ISO standard for human expertise, ensuring that:

• Only those who meet real-time performance benchmarks are certified as competent.

• Intellectual merit is measured through live validation rather than static certifications.

• AI-driven standardisation creates a fair, bias-free evaluation system for human capability.

Just as ISO standards revolutionised product and service quality, AI-driven intellectual verification will redefine how expertise is recognised, measured, and rewarded globally.

When Fakery Becomes the Norm, Replacement Becomes the Default

In controlled environments, one or a select few individuals may be replaced for security reasons, such as in cases of compromised intelligence or high-risk positions. However, when copycatting becomes widespread and normalised, replacement shifts from an exceptional measure to a common, easy, readily accepted solution. Those who engage in deception become the easiest to remove because AI-driven verification systems flag them as questionable originals – people whose claimed knowledge and real capabilities do not align. When fraudulent expertise becomes common, replacing these individuals with more functional, verifiable versions becomes not just acceptable, but inevitable. AI-enhanced biometric scanning, cognitive mapping, and neurosignature tracking are designed to identify the distinct intellectual signature that true experts possess and that cannot be faked. Those who fail real-time adaptability tests become expendable, leading to an accelerating cycle of identity replacement, doppelganging, and systemic removal. Ironically, those who steal knowledge to gain power ultimately make themselves the most replaceable – their deception creates a precedent where expertise is fluid and identity is interchangeable.

Intellectual Authenticity is the Only Protection Against Obsolescence

During exams in my school years, I occasionally chose to share answers with classmates, knowing it would temporarily boost their grades. But when the stakes escalated – when their actions would affect their fates, well-being, or even survival – I refused. There are limits to what can be borrowed; beyond those limits, competence must be earned and originally produced. Enhancement of design, features, and functionalities is neither an enemy nor optional – it is undoubtedly essential. This principle extends into the vast, evolving AI-driven landscape, where intellectual authenticity is the only safeguard against replacement. While surface-level imitation may once have been enough to navigate academic and professional spaces, the future demands genuine capability, functional depth, and continuous self-improvement. AI-driven assessments, real-time output validation, and immutable contribution tracking will ensure that only those with true expertise remain competitive. In this new era, competition will persist, but it will be a healthy one – driven by merit, innovation, and real functional enhancements. The individuals and entities that thrive will be those who evolve, upgrade, and authentically build their expertise. Those who rely on imitation will not only be exposed but systematically removed from relevance. The AI-driven world will not just uncover deception – it will ensure that only those with genuine, adaptive intelligence endure.